Anti-Bias Resources

Dr. Carl Ehrett, July 03, 2020

This post includes resources related to social bias in machine learning, artificial intelligence and data science. These resources are divided into two groups:

- Sources for learning about problems of algorithmic bias related to artificial intelligence, machine learning and data science (AI/ML/DS)

- Guides/examples of how you can use AI/ML/DS to help identify and fight bias. You can use your analytical skills to identify bias where it might otherwise have escaped notice, or apply the tools and techniques here to make sure your own models are free of algorithmic bias.

Much of this list is based on a presentation by Watson-in-the-Watt intern Cierra Oliveira; that presentation can be viewed here.

Sources describing problems of algorithmic bias

Supposedly ‘Fair’ Algorithms Can Perpetuate Discrimination: How the use of AI runs the risk of re-creating the insurance industry’s inequities of the previous century. A look into how AI methods can wind up implementing ‘redlining’ techniques that disproportionately harm minority communities.

Algorithmic bias detection and mitigation: Best practices and policies to reduce consumer harms. A comprehensive article about algorithmic discrimination and techniques for avoiding it, with many references to other useful sources.

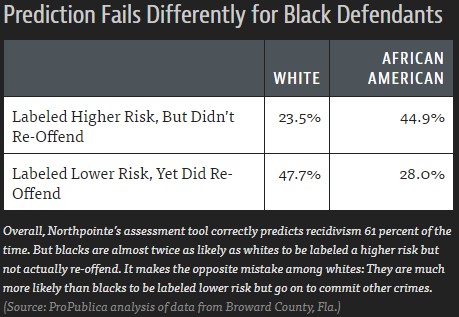

Machine Bias: ProPublica’s famous investigation discovering racial bias in AI used for making decisions related to bail and criminal sentencing.

Some essential reading and research on race and technology. This is another collection of articles, reports and books relevant to algorithmic discrimination.

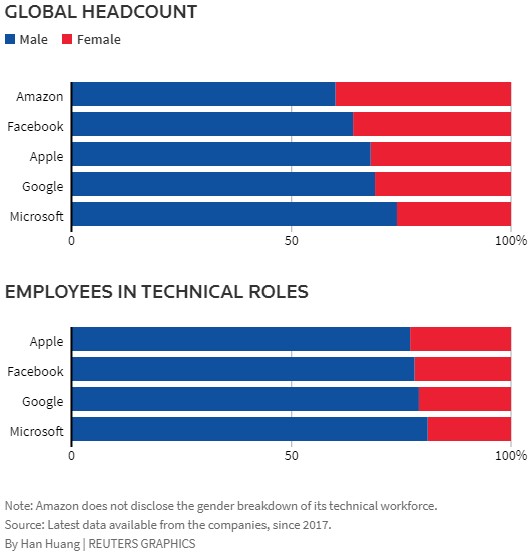

Amazon scraps secret AI recruiting tool that showed bias against women. A news article that illustrates how a company – in this case Amazon – can find itself relying on discriminatory AI.

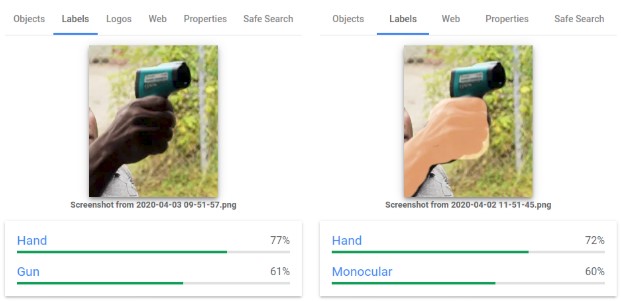

Google apologizes after its Vision AI produced racist results. An example of the sort of algorithmic bias that can manifest in visual recognition AI: “AlgorithmWatch showed that Google Vision Cloud, a computer vision service, labeled an image of a dark-skinned individual holding a thermometer ‘gun’ while a similar image with a light-skinned individual was labeled ‘electronic device’.”

Ethics, Bias, and Fairness in AI - A TWIML Playlist collects episodes of Sam Charrington’s TWIML podcast related both to algorithmic bias and to using AI to fight for justice.

Guides and examples: using AI/ML/DS to uncover bias

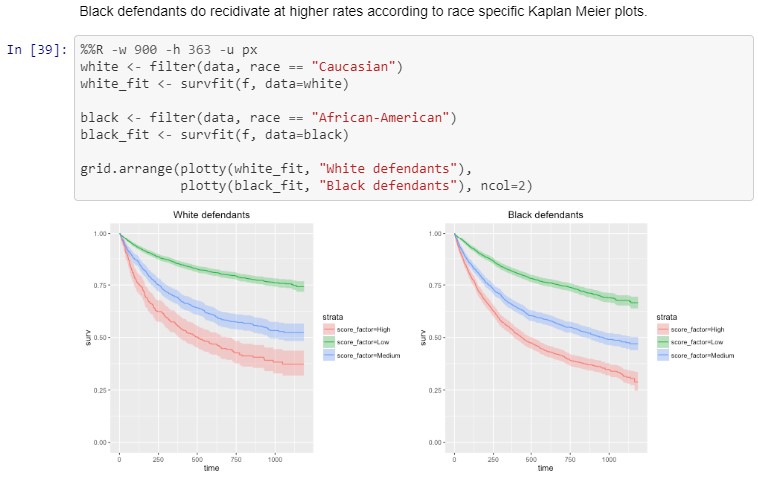

An analysis of racial bias in criminal sentencing. This excellent resource is a Jupyter notebook that walks through the ProPublica analysis described in the Machine Bias article linked above. You can follow along in Python to see how they reached the conclusion that the COMPAS recidivism prediction AI included racial bias, or adapt their code to your own purposes.
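As a rough illustration of the kind of check such an analysis can involve, the sketch below compares false positive rates across two groups in plain Python. It is not taken from the ProPublica notebook: the function name `false_positive_rate_by_group` is hypothetical, and the records are invented toy values, not real sentencing or recidivism data.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Return {group: FPR}, where FPR = false positives / actual negatives.

    Each record is a tuple (group, predicted_high_risk, reoffended).
    """
    false_positives = defaultdict(int)
    actual_negatives = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            # Only people who did not reoffend can be false positives.
            actual_negatives[group] += 1
            if predicted_high_risk:
                false_positives[group] += 1
    return {
        group: false_positives[group] / count
        for group, count in actual_negatives.items()
        if count > 0
    }


# Invented toy records for illustration only (hypothetical groups "A" and "B").
toy_records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", False, False), ("B", True, True),
]

rates = false_positive_rate_by_group(toy_records)
for group, rate in sorted(rates.items()):
    print(f"group {group}: false positive rate = {rate:.2f}")
```

A large gap between the groups' rates would suggest that the model's errors fall more heavily on one group, which is a starting point for closer investigation rather than a conclusion in itself.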

How to create a 3D time-series map using Python and Kepler.gl. This is a useful guide for working with maps; it may help you make use of data you find through the other links listed here or elsewhere. The focus of this tutorial is on producing an interactive 3D map of police interactions resulting in death.

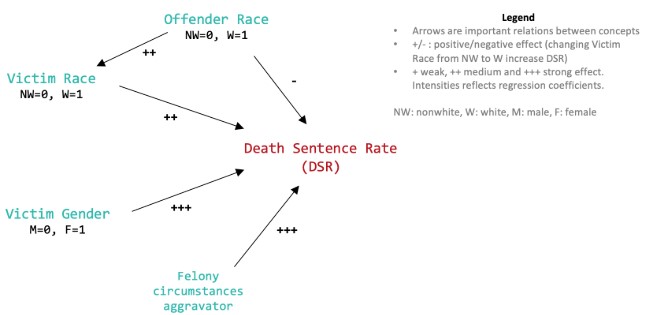

Death Sentences and Race-Ethnicity Biases: When data shows that black lives literally mattered less. This article walks through an analysis of criminal sentencing data to support the conclusion that homicides against white victims receive harsher punishment than homicides against black victims.

Racial Equity Tools “offers tools, research, tips, curricula and ideas for people who want to increase their own understanding and to help those working toward justice at every level”. Of particular interest for AI/ML/DS purposes is their data page, which collects a wide array of data sources that you can use to begin your own analysis.

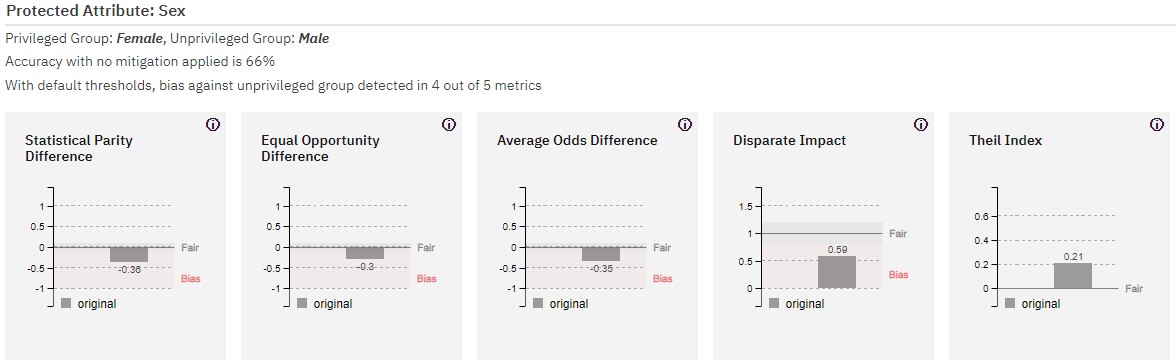

IBM’s AI Fairness 360 is a “comprehensive set of fairness metrics for datasets and machine learning models, explanations for these metrics, and algorithms to mitigate bias in datasets and models.” Their demo is a helpful way to explore the application of their tools to datasets such as the one used in the ProPublica COMPAS analysis.
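To make "fairness metrics" a little more concrete, here is a minimal sketch of two commonly used group fairness measures, statistical parity difference and disparate impact, computed by hand in plain Python. It does not use the AI Fairness 360 API; the helper names and the outcome lists are assumptions made up for illustration.

```python
def favorable_rate(outcomes):
    """Fraction of favorable outcomes (1 = favorable, 0 = unfavorable)."""
    return sum(outcomes) / len(outcomes)


def statistical_parity_difference(unprivileged, privileged):
    """Favorable rate of the unprivileged group minus that of the privileged group.

    A value of 0 means both groups receive favorable outcomes at the same rate.
    """
    return favorable_rate(unprivileged) - favorable_rate(privileged)


def disparate_impact(unprivileged, privileged):
    """Ratio of the unprivileged favorable rate to the privileged favorable rate.

    A value of 1 means both groups receive favorable outcomes at the same rate.
    """
    return favorable_rate(unprivileged) / favorable_rate(privileged)


# Invented model decisions for two hypothetical groups (illustration only).
privileged_outcomes = [1, 1, 1, 0, 1, 1, 0, 1]    # 6 of 8 favorable
unprivileged_outcomes = [1, 0, 0, 1, 0, 1, 0, 0]  # 3 of 8 favorable

spd = statistical_parity_difference(unprivileged_outcomes, privileged_outcomes)
di = disparate_impact(unprivileged_outcomes, privileged_outcomes)
print(f"statistical parity difference: {spd:.3f}")
print(f"disparate impact: {di:.3f}")
```

Toolkits like the ones listed here compute measures of this kind, along with many others, directly from a dataset or a model's predictions, so a hand-rolled version is mainly useful for understanding what the numbers mean.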

The Aequitas Bias and Fairness Toolkit similarly provides a set of tools for detecting and mitigating bias in models. They also provide a demo notebook focusing on the ProPublica COMPAS dataset.

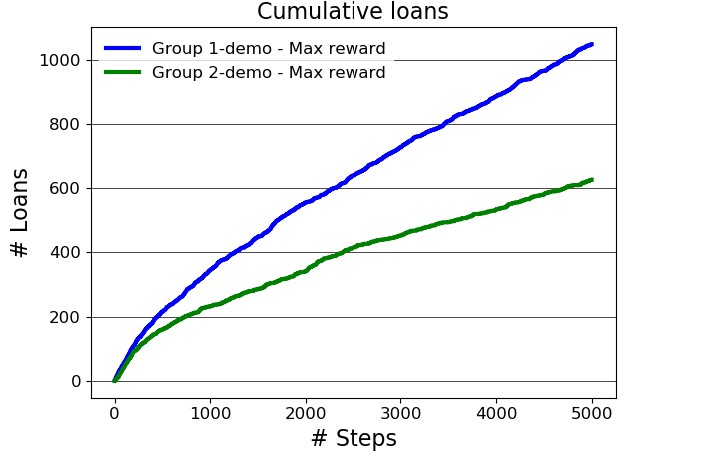

Google’s ML Fairness Gym: “a set of components for building simple simulations that explore the potential long-run impacts of deploying machine learning-based decision systems in social environments.” These tools help one explore the ways in which AI/ML models can have unintended, unexpected, and unjust outcomes. Their quick start guide walks one through an application of the tools to an ML lending model.

TensorFlow Fairness Indicators is a tool for fairness visualization. The tool “enables easy computation of commonly-identified fairness metrics for binary and multiclass classifiers.” They include a helpful example Colab notebook that walks one through an application of the tool.